Help with running a sequential model across multiple GPUs, in order to make use of more GPU memory - PyTorch Forums

Imbalanced GPU memory with DDP, single machine multiple GPUs · Discussion #6568 · PyTorchLightning/pytorch-lightning · GitHub

deep learning - Pytorch: How to know if GPU memory being utilised is actually needed or is there a memory leak - Stack Overflow

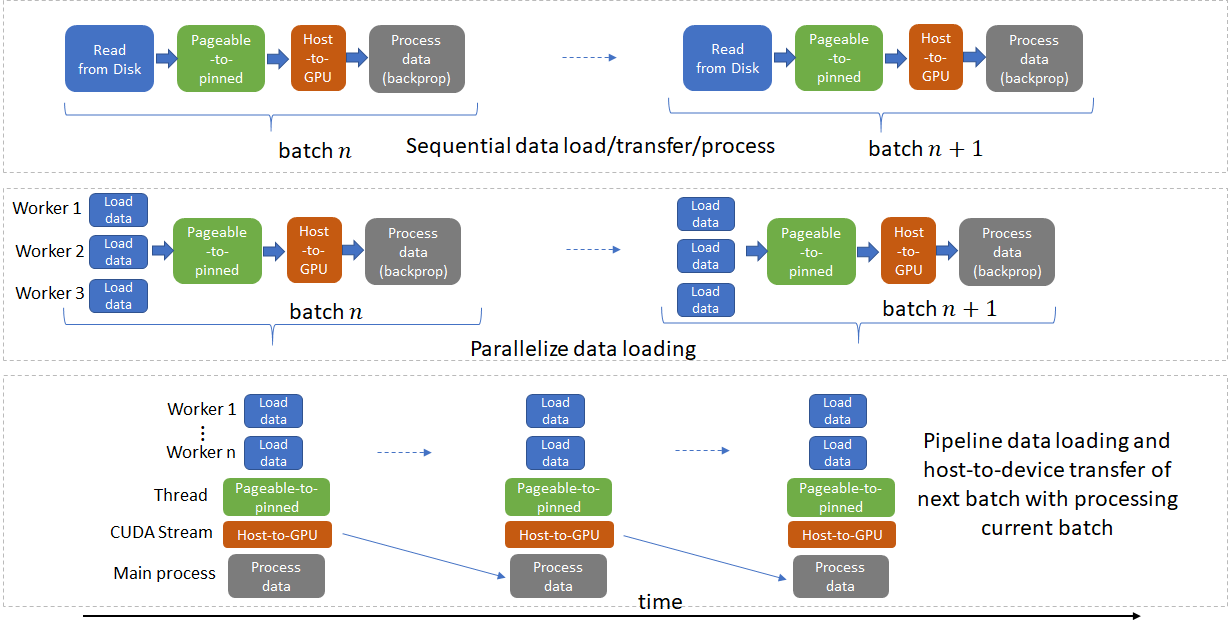

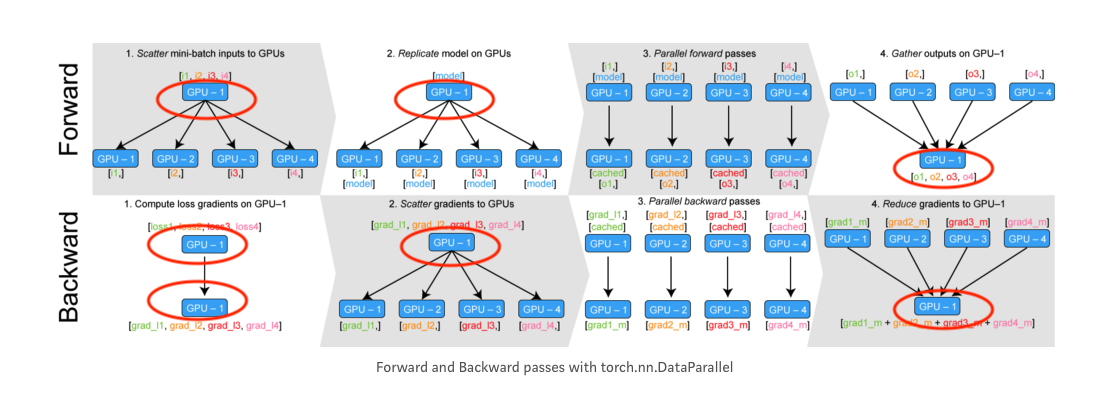

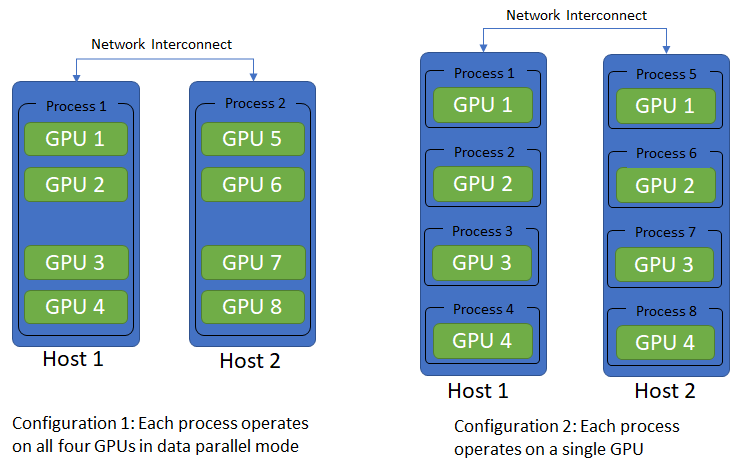

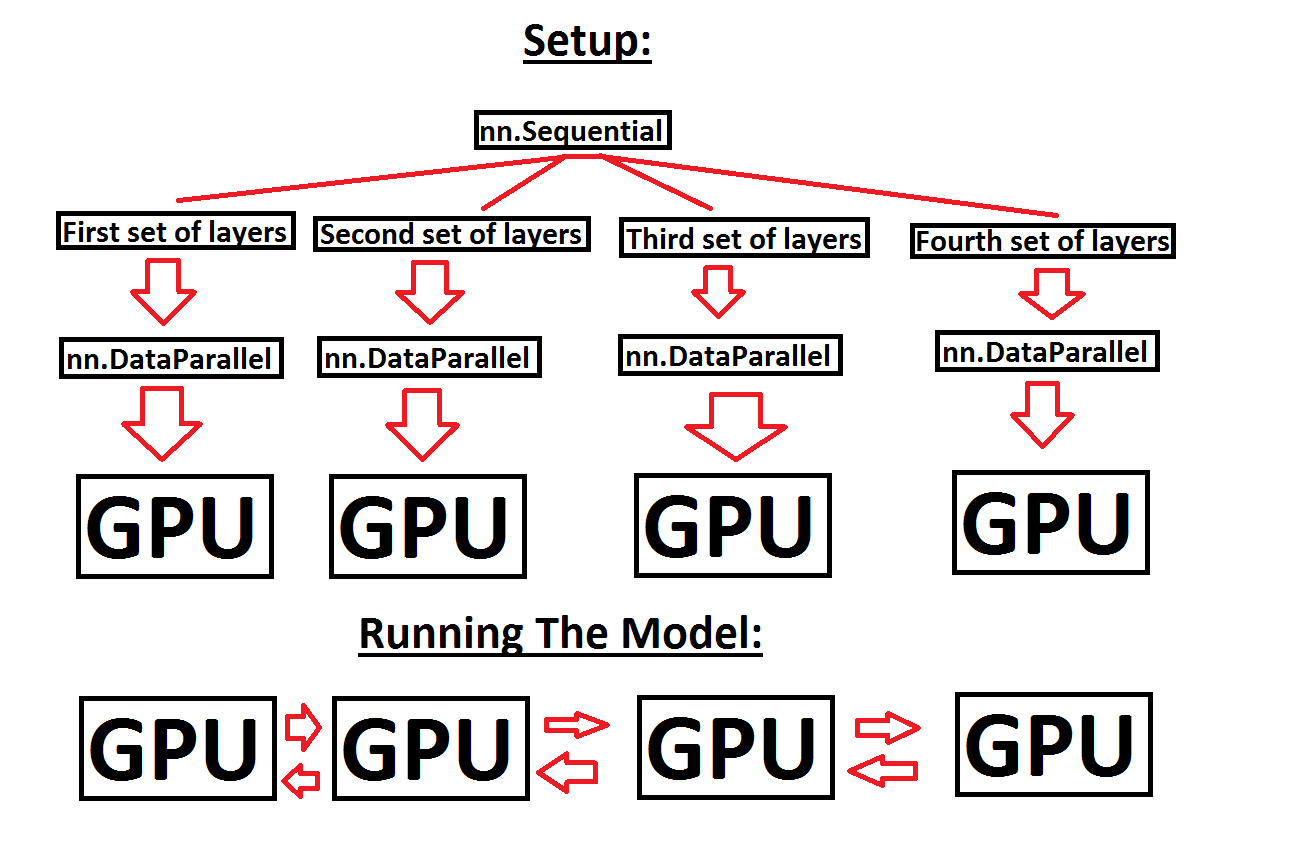

Learn PyTorch Multi-GPU properly. I'm Matthew, a carrot market machine… | by The Black Knight | Medium

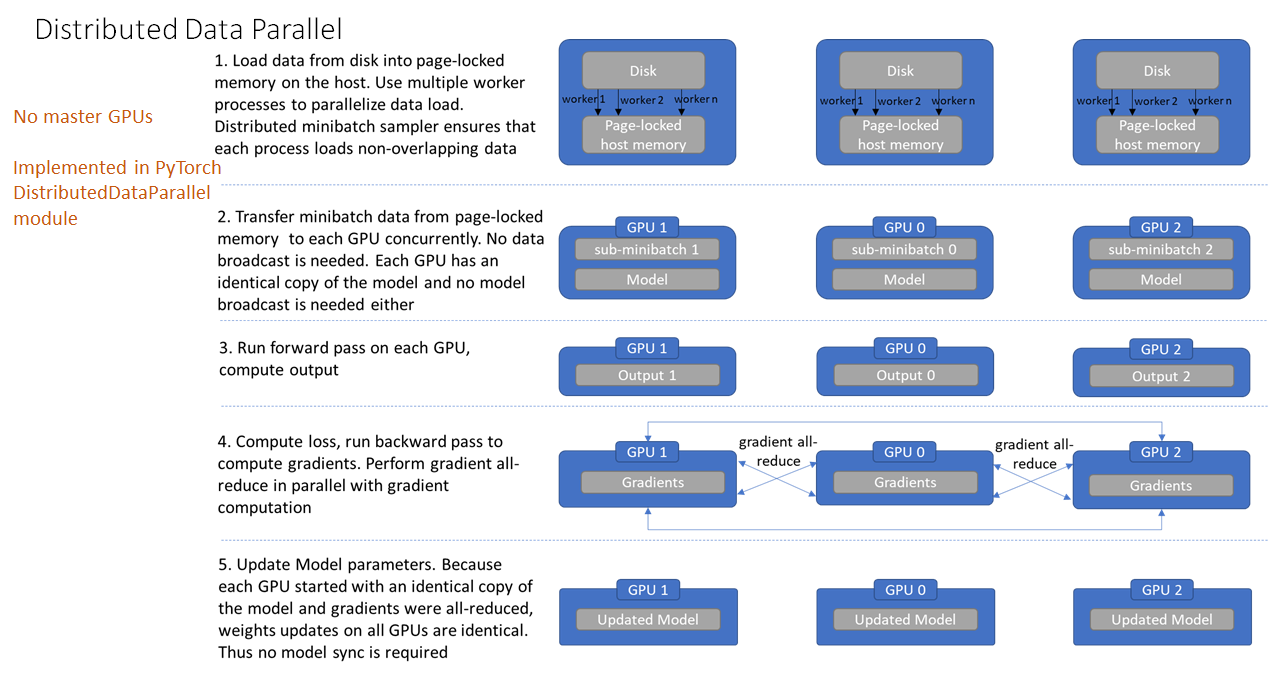

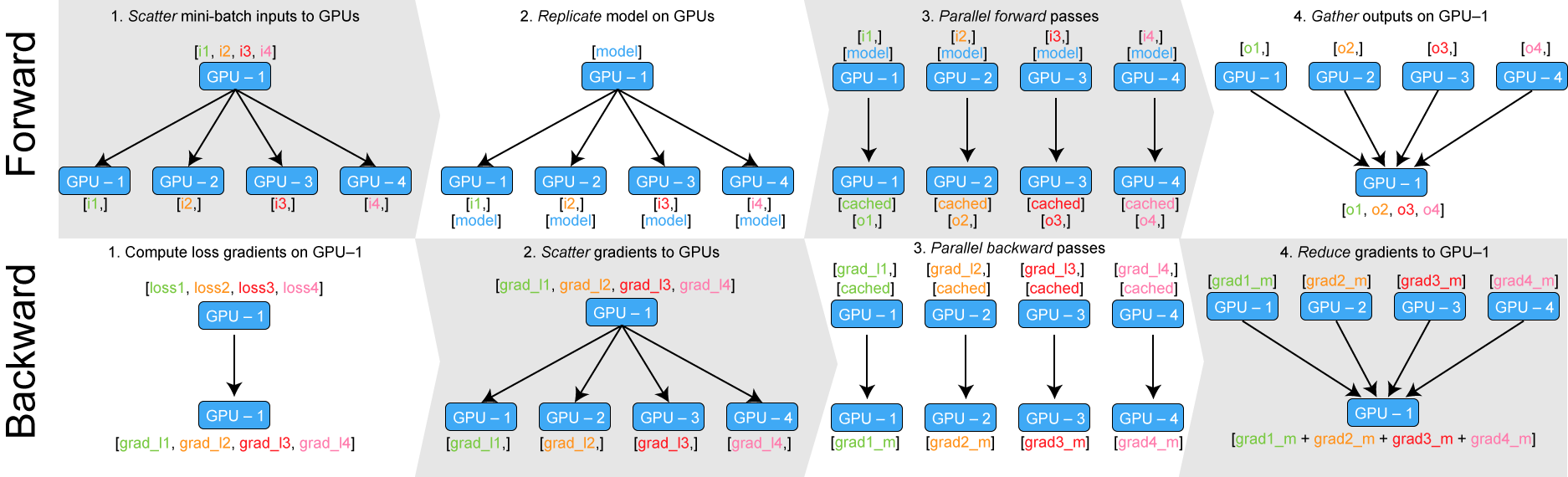

Training Memory-Intensive Deep Learning Models with PyTorch's Distributed Data Parallel | Naga's Blog
